This post is a text summary from Chetan Surpur’s slides:

Overview

An SDR (Sparse Distributed Representation) is both distributed and sparse: each bit carries semantic meaning, and only a small fraction of the bits are on while most are off.
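As a minimal sketch (not taken from the slides), an SDR can be stored as the set of indices of its on bits. The overlap between two SDRs, meaning the number of on bits they share, then measures how semantically similar they are. The sizes n = 2048 and w = 40 are illustrative assumptions.

```python
import random

def random_sdr(n, w, rng):
    """Return a random SDR: w active bit indices out of n, stored as a set."""
    return set(rng.sample(range(n), w))

def overlap(a, b):
    """Number of on bits shared by two SDRs."""
    return len(a & b)

def sparsity(sdr, n):
    """Fraction of the n bits that are on."""
    return len(sdr) / n

rng = random.Random(42)
n, w = 2048, 40                      # illustrative sizes, about 2% of bits on
a = random_sdr(n, w, rng)
b = random_sdr(n, w, rng)            # unrelated SDR
# b_similar shares 30 of its 40 on bits with a; the other 10 are outside a.
shared = rng.sample(sorted(a), 30)
others = rng.sample(sorted(set(range(n)) - a), 10)
b_similar = set(shared) | set(others)

print(sparsity(a, n))                # 0.01953125
print(overlap(a, b_similar))         # 30
print(overlap(a, b))                 # small: unrelated sparse SDRs rarely share bits
```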

CLA (Cortical Learning Algorithm) describes the operation of a single layer of cortical neurons. The patterns it learns can be either spatial or temporal. Each column represents some meaning. Each cell in a column represents the same meaning as its column, but in a different context, and this is the key to learning high-order sequences. Learning occurs by forming and removing connections between neurons (cells).

Spatial Pooler

The spatial pooler maps a non-sparse input bit vector to an SDR and pools similar inputs together, so that similar inputs map to similar SDRs.

Input: a vector of bits, which can be the output of an encoder or the cells of a previous layer.

Output: activation of columns.

In the spatial pooler, every cell in a column behaves identically, so the column as a whole is connected to the input. Each column is mapped to a subset of the input bits, and the more of those bits are on, the more likely the column is to become active. The size of this receptive field is controlled by potentialRadius. potentialPct sets the percentage of inputs within that radius that the column can potentially connect to. Because each column gets its own random subset, potentialPct helps adjacent columns learn to represent different inputs.
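Here is a 1-D sketch of how a column's potential pool could be chosen. It assumes that columns and inputs lie on the same line and that each column's center is mapped proportionally onto the input space. The function name potential_pool and this center mapping are hypothetical; only potentialRadius and potentialPct come from the text.

```python
import random

def potential_pool(column, num_columns, num_inputs,
                   potential_radius, potential_pct, rng):
    """Hypothetical 1-D potential pool for one column.

    The column's center is mapped onto the input space. Every input within
    potential_radius of that center is a candidate, and a random
    potential_pct fraction of the candidates is kept.
    """
    # Assumed mapping: column index -> center of its receptive field.
    center = int((column + 0.5) * num_inputs / num_columns)
    lo = max(0, center - potential_radius)
    hi = min(num_inputs - 1, center + potential_radius)
    candidates = list(range(lo, hi + 1))
    # Each column keeps a different random subset, so neighboring columns
    # with overlapping receptive fields still sample different inputs.
    k = int(round(potential_pct * len(candidates)))
    return sorted(rng.sample(candidates, k))

rng = random.Random(0)
pool_a = potential_pool(10, 64, 256, potential_radius=8, potential_pct=0.5, rng=rng)
pool_b = potential_pool(11, 64, 256, potential_radius=8, potential_pct=0.5, rng=rng)
print(pool_a)
print(pool_b)
```

With potential_pct = 1.0 the pool is simply every input within the radius. Smaller values are what let neighboring columns differ.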

Permanence represents the potential for a connection to be formed between a column and an input bit. A synapse counts as connected once its permanence reaches the minimum “connect” threshold, synPermConnected. There are several ways to initialize the permanence of a synapse: Gaussian, random, etc.
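The next sketch initializes permanences for a column's potential synapses and then derives which synapses are connected. The threshold value 0.1, the spread of the Gaussian, and the helper names are assumptions for illustration. The slides only say that synPermConnected is the connect threshold and that Gaussian or random initialization may be used.

```python
import random

SYN_PERM_CONNECTED = 0.1  # assumed value for synPermConnected

def init_permanences(pool, rng, method="gaussian"):
    """Hypothetical helper: give each potential synapse a permanence in [0, 1]."""
    perms = {}
    for i in pool:
        if method == "gaussian":
            # Centered on the threshold, so roughly half start out connected.
            p = rng.gauss(SYN_PERM_CONNECTED, 0.05)
        else:
            p = rng.random()  # uniform in [0, 1)
        perms[i] = min(1.0, max(0.0, p))  # clip into [0, 1]
    return perms

def connected_synapses(perms):
    """Inputs whose permanence has reached the connect threshold."""
    return {i for i, p in perms.items() if p >= SYN_PERM_CONNECTED}

rng = random.Random(1)
perms = init_permanences(range(20), rng)
print(sorted(connected_synapses(perms)))
```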

To force the output to be sparse, local inhibition is applied among columns within an inhibition radius. The radius changes dynamically as connections grow and die. It is estimated from the average range of inputs that each column is connected to and the average number of columns each input is connected to.
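The following 1-D sketch shows one way to compute such an estimate. It assumes, for illustration, that the inhibition diameter equals the average connected span of a column (in input bits) multiplied by the number of columns per input bit. The function names and this exact formula are hypothetical.

```python
def avg_connected_span(connected):
    """Average range of input bits covered by each column's connections.

    connected: list of sets, one set of connected input indices per column.
    """
    spans = [max(c) - min(c) + 1 for c in connected if c]
    return sum(spans) / len(spans) if spans else 0.0

def inhibition_radius(connected, num_inputs):
    """Hypothetical estimate of the inhibition radius, in columns (1-D)."""
    columns_per_input = len(connected) / num_inputs
    diameter = avg_connected_span(connected) * columns_per_input
    radius = (diameter - 1) / 2
    return max(1, int(round(radius)))  # never shrink below one column

# 8 columns over 8 inputs; every column's connections span 5 inputs.
connected = [{i, i + 4} for i in range(4)] + [{i - 4, i} for i in range(4, 8)]
print(inhibition_radius(connected, num_inputs=8))  # 2
```

As connections grow, spans widen and the radius grows with them. As they die, it shrinks.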

Compute

- For each column, compute an “overlap score” that represents how many input bits are “actively connected” to the column.

- Apply boosting to the overlap scores if needed.

- Check inhibition: for each column, look at the neighboring columns within the inhibition radius.

- Keep the top N columns by overlap score.
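Here is a minimal sketch of these steps. It assumes global inhibition, meaning the whole layer is treated as one neighborhood, and it takes N as a direct argument. Local inhibition would repeat the selection inside each column's inhibition radius. The data layout (one set of connected input indices per column) and the function names are illustrative assumptions.

```python
def overlap_scores(connected, active_inputs, boosts=None):
    """Overlap = number of active input bits reached through a column's
    connected synapses, optionally multiplied by the column's boost factor."""
    scores = []
    for col, syns in enumerate(connected):
        score = len(syns & active_inputs)
        if boosts is not None:
            score *= boosts[col]
        scores.append(score)
    return scores

def inhibit_global(scores, n):
    """Keep the n columns with the highest overlap (ties broken by index).
    Columns with zero overlap are never selected."""
    ranked = sorted(range(len(scores)), key=lambda c: (-scores[c], c))
    return sorted(c for c in ranked[:n] if scores[c] > 0)

connected = [{0, 1, 2}, {2, 3, 4}, {4, 5, 6}, {6, 7, 0}, {1, 3, 5}]
active_inputs = {1, 2, 3, 5}
scores = overlap_scores(connected, active_inputs)
print(scores)                      # [2, 2, 1, 0, 3]
print(inhibit_global(scores, 2))   # [0, 4]
```

Passing boosts=[1, 2, 1, 1, 1] doubles column 1's score to 4, so it displaces column 0 among the winners. This is how boosting gives under-used columns a chance to win.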

N is chosen to achieve a desired sparsity, specified by either localAreaDensity or numActiveColumnsPerInhArea:

```python
def _inhibitColumns(self, overlaps):
  """
  Performs inhibition. This method calculates the necessary values needed to
  actually perform inhibition and then delegates the task of picking the
  active columns to helper functions.

  Parameters:
  ----------------------------
  :param overlaps: an array containing the overlap score for each column.
                  The overlap score for a column is defined as the number
                  of synapses in a "connected state" (connected synapses)
                  that are connected to input bits which are turned on.
  """
  # determine how many columns should be selected in the inhibition phase.
  # This can be specified by either setting the 'numActiveColumnsPerInhArea'
  # parameter or the 'localAreaDensity' parameter when initializing the class
  if (self._localAreaDensity > 0):
    density = self._localAreaDensity
  else:
    inhibitionArea = ((2*self._inhibitionRadius + 1)
                      ** self._columnDimensions.size)
    inhibitionArea = min(self._numColumns, inhibitionArea)
    density = float(self._numActiveColumnsPerInhArea) / inhibitionArea
    density = min(density, 0.5)

  if self._globalInhibition or \
     self._inhibitionRadius > max(self._columnDimensions):
    return self._inhibitColumnsGlobal(overlaps, density)
  else:
    return self._inhibitColumnsLocal(overlaps, density)
```

Output of SP

Learning (optional): Hebbian learning strengthens the connections of active columns to active inputs and weakens their connections to inactive inputs. In addition, any column that becomes so weakly connected to its potential inputs that it could never become active again has all its connections strengthened, to keep it from dying.
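A sketch of the Hebbian update for one winning column follows. The increment, decrement, and threshold values are assumptions. The way a weak column is rescued is also a simplification: all of its permanences are raised until enough synapses are connected.

```python
SYN_PERM_CONNECTED = 0.1      # assumed connect threshold
SYN_PERM_ACTIVE_INC = 0.05    # assumed increment for active inputs
SYN_PERM_INACTIVE_DEC = 0.01  # assumed decrement for inactive inputs

def learn_column(perms, active_inputs, min_connected=1):
    """Hypothetical helper: update one active column's permanences in place."""
    for i in perms:
        if i in active_inputs:
            perms[i] = min(1.0, perms[i] + SYN_PERM_ACTIVE_INC)
        else:
            perms[i] = max(0.0, perms[i] - SYN_PERM_INACTIVE_DEC)
    # Keep the column alive: if too few synapses are connected, strengthen
    # all of them until at least min_connected cross the threshold.
    min_connected = min(min_connected, len(perms))
    while sum(p >= SYN_PERM_CONNECTED for p in perms.values()) < min_connected:
        for i in perms:
            perms[i] = min(1.0, perms[i] + SYN_PERM_ACTIVE_INC)
    return perms

perms = {0: 0.2, 1: 0.09, 2: 0.5}
learn_column(perms, active_inputs={0, 1})
print(perms)  # inputs 0 and 1 strengthened, input 2 weakened
```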

Boost (optional): strengthen the connections of columns that don’t overlap enough with their inputs (relevant parameters: minOverlapDutyCycles, synPermBelowStimulusInc). Then prepare to boost, in the next cycle, the columns that aren’t active often enough (relevant parameter: minActiveDutyCycles).
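The sketch below shows the duty-cycle bookkeeping behind boosting. It treats a duty cycle as a moving average over a window of period steps. The period, max_boost, and the linear boost formula are illustrative assumptions. The thresholds play the roles of minActiveDutyCycles, minOverlapDutyCycles, and synPermBelowStimulusInc named above.

```python
def update_duty_cycle(duty, was_on, period=1000):
    """Moving average of how often a column was active (or overlapping)."""
    return (duty * (period - 1) + (1.0 if was_on else 0.0)) / period

def boost_factor(active_duty, min_active_duty, max_boost=10.0):
    """1.0 for columns that are active often enough; otherwise rises linearly
    toward max_boost as the active duty cycle falls toward zero."""
    if active_duty >= min_active_duty:
        return 1.0
    return max_boost - (max_boost - 1.0) * active_duty / min_active_duty

def bump_if_underused(perms, overlap_duty, min_overlap_duty,
                      syn_perm_below_stimulus_inc=0.01):
    """Raise all permanences of a column that rarely overlaps its input."""
    if overlap_duty < min_overlap_duty:
        for i in perms:
            perms[i] = min(1.0, perms[i] + syn_perm_below_stimulus_inc)
    return perms

duty = 0.0
for step in range(5):
    duty = update_duty_cycle(duty, was_on=False)
print(boost_factor(duty, min_active_duty=0.01))  # 10.0, the column never won
```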

Finally, update the inhibition radius.

Temporal Pooler

The temporal pooler learns transitions between SDRs. Both the SP and the TP operate on the same layer of cortical neurons.

Input: the state of cells from the previous timestep and the activation of columns from the current timestep.

Output: an activation of cells within the active columns, which selects a particular context, and a prediction of cells. The predicted columns form the predicted next SDR, and the predicted cells identify its context.

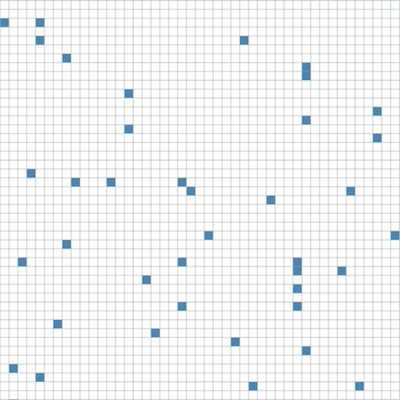
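Here is a much-simplified, inference-only sketch of the cell-level step, with learning omitted. Each distal segment is reduced to a set of presynaptic cells, and a cell becomes predictive when enough of those cells are active. All names and the threshold are hypothetical. The sketch also uses the standard HTM "bursting" rule, which the slides summary above does not spell out. If an active column has predicted cells, only those fire, selecting one context. If it has none, every cell in the column fires.

```python
def tp_step(active_columns, prev_predictive, cells_per_column, segments,
            threshold=2):
    """One simplified temporal pooler step (hypothetical, inference only).

    active_columns: columns chosen by the spatial pooler this timestep
    prev_predictive: set of (column, cell) pairs predicted last timestep
    segments: dict mapping (column, cell) -> list of sets of (column, cell)
              presynaptic cells, standing in for distal segments
    """
    active_cells = set()
    for col in active_columns:
        predicted = {c for c in prev_predictive if c[0] == col}
        if predicted:
            # Expected input: only the predicted cells fire (one context).
            active_cells |= predicted
        else:
            # Unexpected input: every cell in the column fires (bursting).
            active_cells |= {(col, i) for i in range(cells_per_column)}
    # A cell is predictive if any of its segments sees enough active cells.
    predictive = {cell for cell, segs in segments.items()
                  if any(len(seg & active_cells) >= threshold for seg in segs)}
    predicted_columns = {col for col, _ in predictive}
    return active_cells, predictive, predicted_columns

# Sequence A -> B: A uses columns {0, 1}, B uses columns {2, 3}.
segments = {(2, 0): [{(0, 0), (1, 0)}], (3, 0): [{(0, 0), (1, 0)}]}
active, predictive, pred_cols = tp_step({0, 1}, set(), 2, segments)
print(sorted(active))     # [(0, 0), (0, 1), (1, 0), (1, 1)]  (A bursts)
print(sorted(pred_cols))  # [2, 3]  (B is predicted)
active, predictive, pred_cols = tp_step({2, 3}, predictive, 2, segments)
print(sorted(active))     # [(2, 0), (3, 0)]  (B in the context "after A")
```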

Sparse Distributed Representation (SDR) is one of the fundamentals of the HTM system. An SDR can be represented as an n-dimensional binary vector in which only a small percentage of the components are 1.

## 1. Biological Evidence
